Object Detection with Mini Drones

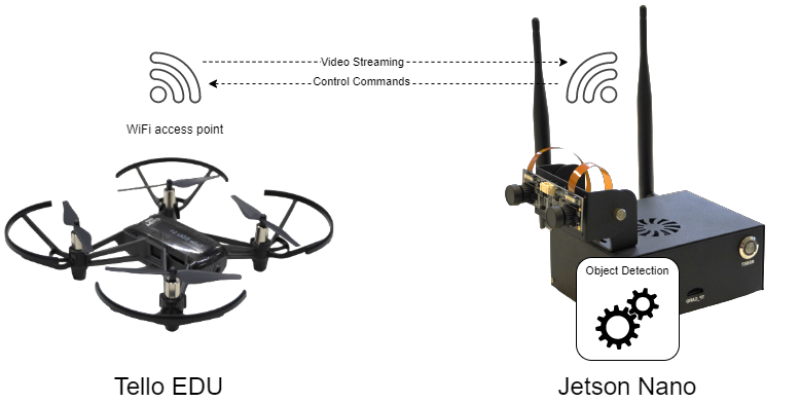

We use programmable mini-drones (Ryze Tello) as flying webcams. The video stream is analyzed in real time by an object detector running on an NVIDIA Jetson Nano embedded computer. The object detector is SSD-MobileNet-V2, a single-shot detector pre-trained on the widely used COCO dataset.

| Object detection (open loop control) - Demo Video |
|---|

| Person tracking (closed loop control) - Demo Video |
|---|

How to set up the Object Detection Demo with Tello and Jetson

Go to Object Detection Demo

Basic Principles of Person Tracking Demo

Description

The Tello drone adjusts its yaw angle to keep the detected person close to the center of the camera image. If more than one person is detected, the one closest to the camera is chosen. Closeness is estimated by comparing the areas of the detection boxes: the detection with the largest area is selected.
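The selection rule described above can be sketched in a few lines of Python. This is only an illustration, not the code from the repository: the `Box` class, `pick_closest` and `horizontal_error` are hypothetical names, and the box coordinates are assumed to be given in pixels.

```python
from dataclasses import dataclass
from typing import Optional, Sequence


@dataclass
class Box:
    """Hypothetical stand-in for one person detection, in pixels."""
    left: float
    top: float
    right: float
    bottom: float

    @property
    def area(self) -> float:
        # Degenerate boxes get an area of zero instead of a negative value.
        return max(0.0, self.right - self.left) * max(0.0, self.bottom - self.top)

    @property
    def center_x(self) -> float:
        return (self.left + self.right) / 2.0


def pick_closest(boxes: Sequence[Box]) -> Optional[Box]:
    """Return the box with the largest area, or None if nothing was detected."""
    return max(boxes, key=lambda b: b.area, default=None)


def horizontal_error(box: Box, frame_width: int) -> float:
    """Signed offset of the box center from the frame center, in pixels.

    A positive value means the person is to the right of the image center.
    """
    return box.center_x - frame_width / 2.0
```

The horizontal error is the quantity a yaw controller would try to drive towards zero. Using the box area as a proxy for distance is a simplification: it assumes that all persons are of similar size and fully visible.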

Used libraries

Several Python libraries were used to control the drone and to detect objects. The Tello library [1] was used to send basic movement and streaming commands to the drone. Object detection was implemented with the standard jetson.inference module [2]. Camera output and keyboard control were implemented with the OpenCV library [3].

PID Controller

To make the yaw changes smoother, a PID controller was implemented in Python. The P and D gains were tuned experimentally for the given image parameters. You can read more about PID controllers here: https://en.wikipedia.org/wiki/PID_controller
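As an illustration of the idea, a minimal PD controller (only the P and D terms, matching the gains mentioned above) could look like the sketch below. The class name, the gain values and the output limit are assumptions chosen for the example and are not taken from the repository. The derivative is approximated by the difference between consecutive errors, which assumes a roughly constant time between frames.

```python
class PDController:
    """Minimal PD controller sketch with a clamped integer output."""

    def __init__(self, kp: float, kd: float, limit: float = 100.0) -> None:
        self.kp = kp          # proportional gain (hypothetical value, to be tuned)
        self.kd = kd          # derivative gain (hypothetical value, to be tuned)
        self.limit = limit    # maximum absolute output
        self.prev_error = 0.0

    def update(self, error: float) -> int:
        """Return the control output for the current error."""
        # Per-frame difference as a simple derivative estimate.
        derivative = error - self.prev_error
        self.prev_error = error
        output = self.kp * error + self.kd * derivative
        # Clamp the output to the range [-limit, limit].
        return int(max(-self.limit, min(self.limit, output)))


if __name__ == "__main__":
    pd = PDController(kp=0.5, kd=0.25)
    for err in (100.0, 100.0, 40.0, 0.0):
        print(err, pd.update(err))
```

Here the error would be the horizontal offset of the person from the image center, and the output would be used as the yaw speed. The limit of 100 is chosen because the Tello remote-control command accepts values between -100 and 100 per channel.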

This experiment was motivated by numerous public papers and YouTube videos. In particular, the videos of Murtaza (https://youtube.com/playlist?list=PLMoSUbG1Q_r8ib2U4AbC_mPTsa-u9HoP_) were used as a reference for the PID implementation.

The code is openly available on GitHub: https://github.com/eligosoftware/ryzetellohsrw. The main Python file is https://github.com/eligosoftware/ryzetellohsrw/blob/main/move_head.py

Update 17.02.2022:

A Jupyter Notebook implementation was added to make the programming process easier and more comprehensible. To run this notebook, the Docker container from https://github.com/harleylara/jetson-workshop should be downloaded and an SSH connection to the Jetson Nano should be established. Opening the notebook inside the GUI of the Jetson Nano caused memory and processor failures.

Moreover, the Tello drone should be configured to join an existing Wi-Fi network instead of creating its own. To do this, send the command “ap wifiname wifipassword” to the drone (the Tello SDK guide describes this as switching the drone to Station mode). To return to the default mode, in which the drone creates its own Wi-Fi network, press and hold the power button on the drone for 5 seconds. Read more about it here: https://dl.djicdn.com/downloads/RoboMaster+TT/Tello_SDK_3.0_User_Guide_en.pdf.

The Jupyter notebook itself can be downloaded from https://github.com/eligosoftware/ryzetellohsrw/blob/main/detection.ipynb.
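For illustration, the Wi-Fi command can also be sent without any drone library, because the Tello SDK accepts plain text commands over UDP. The sketch below assumes the drone's default address 192.168.10.1 and command port 8889, and it assumes that the computer is currently connected to the drone's own Wi-Fi network. The function names are hypothetical, and "wifiname" and "wifipassword" are placeholders.

```python
import socket
from typing import List, Sequence, Tuple

# Assumed default address of the drone while it provides its own Wi-Fi network.
TELLO_ADDRESS = ("192.168.10.1", 8889)


def build_ap_commands(ssid: str, password: str) -> List[str]:
    """Return the text commands to send, in order.

    "command" enters SDK mode; the "ap" command then passes the credentials
    of the existing Wi-Fi network to the drone.
    """
    return ["command", f"ap {ssid} {password}"]


def send_commands(commands: Sequence[str],
                  address: Tuple[str, int] = TELLO_ADDRESS,
                  timeout: float = 5.0) -> None:
    """Send each command over UDP and print the reply, if any arrives."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.settimeout(timeout)
        for text in commands:
            sock.sendto(text.encode("utf-8"), address)
            try:
                reply, _ = sock.recvfrom(1024)
                print(text, "->", reply.decode("utf-8", errors="replace"))
            except socket.timeout:
                print(text, "-> no reply")


if __name__ == "__main__":
    # Placeholders: replace with the name and password of your own network.
    send_commands(build_ap_commands("wifiname", "wifipassword"))
```

After the drone has joined the existing network, it receives a new IP address from the router, so the default address above no longer applies.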

Update 23.02.2022:

Forward and backward movement options were added to the notebook https://github.com/eligosoftware/ryzetellohsrw/blob/main/detection.ipynb. The calculation is based on the area of the detected person's bounding box. The drone tries to maintain an “ideal” box area and moves forward or backward depending on the difference between the actual and the desired box area. Warning! The experiments should be carried out in an enclosed area that is free of obstacles for the drone. An experimental feature for stopping and landing the drone by showing it specific objects was also added. The values of the corresponding variables should be adjusted for that.
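The distance rule can be sketched as a simple comparison with a tolerance band. This is not the notebook's code: the function name, the tolerance of 20 % and the speed value are assumptions made for the example.

```python
def forward_back_speed(box_area: float, ideal_area: float,
                       tolerance: float = 0.2, speed: int = 20) -> int:
    """Return a forward/backward speed based on the detected box area.

    Positive means move forward (the person appears too small, so too far away),
    negative means move backward, and 0 means hold position.
    """
    if ideal_area <= 0:
        raise ValueError("ideal_area must be positive")
    ratio = box_area / ideal_area
    if ratio < 1.0 - tolerance:
        return speed       # box too small: move towards the person
    if ratio > 1.0 + tolerance:
        return -speed      # box too large: move away from the person
    return 0               # within the tolerance band: do not move
```

The tolerance band keeps the drone from oscillating back and forth when the box area fluctuates slightly from frame to frame.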

External links

1. Tello library GitHub https://github.com/harleylara/tello-python

2. Jetson Inference GitHub https://github.com/dusty-nv/jetson-inference

3. OpenCV https://opencv.org/

More on Mini-Drones and Object Detection

ESP related projects

- ESP-Drone, official project by ESPRESSIF

- Meet ESPcopter, main website

Mini Drones

| 1. Educational Drone 1, Parrot Mambo (discontinued) | 2. Educational Drone 2, Ryze Tello EDU |